Hey there! Amazing that you are here. Grab a coffee, because we're diving into one of my favourite rabbit holes: quantum error correction (QEC).

Typically we treat QEC like a rigid architectural blueprint.

But what happens when the qubits don't stay put?

What if we ask, 'Where the ancillas at?' and the answer is: it's complicated...

Enjoy the deep dive.

The dominant paradigm in quantum error correction is currently static. We treat QEC codes like rigid architectural blueprints, demanding that our quantum hardware conform to a fixed layout of data and ancillary qubits. This "code-first" approach, however, runs directly into the practical constraints of leading platforms like superconducting circuits, where qubits typically couple only to their nearest neighbours and suffer from crosstalk.

A new, more powerful philosophy is gaining traction. While there is no standard name for it yet, I will call it Architecture-Aware QEC.

Instead of forcing the hardware to fit the code, we choreograph the code to match the hardware. This approach trades spatial rigidity for temporal flexibility, dynamically reassigning the roles of qubits over time. Two recent papers, though vastly different in scale, illustrate this principle in action.

The Microcosm: Russia's 3-Qubit "Walking Ancilla"

A recent paper from the Russian Quantum Center provides a minimalist demonstration of this concept. Faced with a highly constrained device, just three linearly connected transmons, they implemented an error detection scheme not by adding more qubits, but by making the ancillary qubit "walk".

The Challenge: Perform stabiliser measurements on two data qubits (Q1, Q3) with an ancilla (Q2) that can only interact with one at a time.

The Dynamic Solution: The quantum state of the ancilla is dynamically transferred between physical qubits using SWAP operations, effectively moving its function across the chain to complete the stabiliser measurement.

Source: [1]

The Result: Despite the increased circuit depth, this dynamic scheme achieves performance comparable to a conventional static-ancilla circuit. It shows that clever temporal scheduling can compensate for severe spatial and connectivity limitations.
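To make the "walking" idea concrete, here is a minimal toy sketch in plain Python. It is not the circuit from [1]: the gate sequence, the function names and the tiny state-vector simulator are all my own assumptions, chosen only to show that a parity check still gives the right answer when a SWAP relocates the ancilla's state partway through.

```python
import math

N = 3  # Q1, Q2, Q3 map to bit positions 0, 1, 2 of the basis-state index


def basis_state(q1, q2, q3):
    """State vector for the computational basis state |q1 q2 q3>."""
    state = [0j] * (2 ** N)
    state[q1 | (q2 << 1) | (q3 << 2)] = 1 + 0j
    return state


def apply(state, gate):
    """Apply a gate that only permutes computational basis states."""
    out = [0j] * len(state)
    for index, amplitude in enumerate(state):
        bits = [(index >> q) & 1 for q in range(N)]
        gate(bits)
        out[sum(bit << q for q, bit in enumerate(bits))] += amplitude
    return out


def cnot(control, target):
    def gate(bits):
        bits[target] ^= bits[control]
    return gate


def swap(a, b):
    def gate(bits):
        bits[a], bits[b] = bits[b], bits[a]
    return gate


def walking_parity(state):
    """Hypothetical sequence: ancilla starts on Q2 and ends on Q3."""
    state = apply(state, cnot(0, 1))  # Q1's bit is added to the ancilla on Q2
    state = apply(state, swap(1, 2))  # ancilla state walks to Q3, Q3's data moves to Q2
    state = apply(state, cnot(1, 2))  # the relocated data bit is added to the ancilla
    return state


def prob_one(state, qubit):
    """Probability of reading 1 on the given physical qubit."""
    return sum(abs(a) ** 2 for i, a in enumerate(state) if (i >> qubit) & 1)


# Even-parity Bell pair on the data qubits: the ancilla should read 0.
bell = [(a + b) / math.sqrt(2)
        for a, b in zip(basis_state(0, 0, 0), basis_state(1, 0, 1))]
print(prob_one(walking_parity(bell), 2))

# Odd-parity input |1 0 0>: the ancilla should read 1.
print(prob_one(walking_parity(basis_state(1, 0, 0)), 2))
```

The parity is read out from Q3, not from Q2 where the ancilla started. The data that began on Q3 now lives on Q2, so a following round would have to track that relabelling. That bookkeeping is exactly the kind of temporal scheduling the paper is about.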

The Macro-Scale: Google's Dynamic Surface Codes

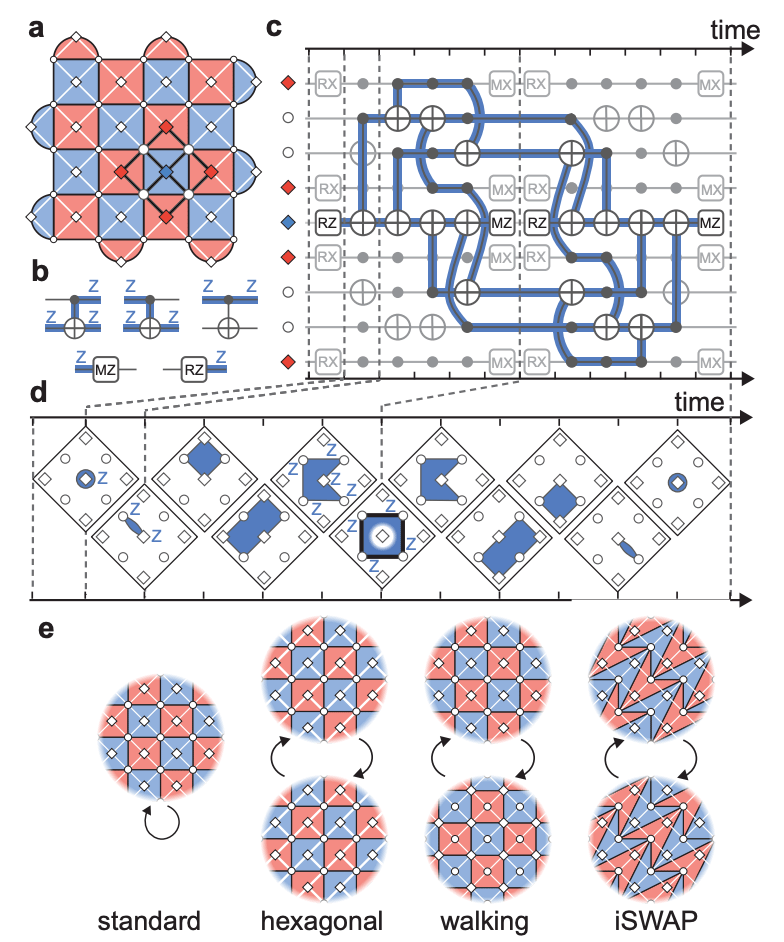

At the other end of the spectrum, Google's Quantum AI team has demonstrated that this dynamism isn't just a niche solution but a scalable strategy for fault-tolerance. On a large-scale processor, they implemented three different dynamic variations of the surface code, each tailored to solve a specific hardware challenge.

Source: [2]

Hexagonal Lattice Code: They embedded the surface code onto a hexagonal grid, a feat made possible by a time-dynamic circuit. This reduces the qubit connectivity requirement from four neighbours to three.

The dynamic part involves laterally shifting the detecting regions and using a time-reversed circuit every second cycle, creating a periodic deformation that preserves error detection while accommodating the new geometry.
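The "four neighbours to three" claim is easy to check on paper, but a few lines of Python make it tangible. This sketch only counts couplers. It says nothing about the time-dynamic circuit itself, and the "drop every other vertical coupler" construction is my own illustrative assumption about how to turn a square grid into a hexagonal (brick-wall) one.

```python
def grid_edges(width, height, hexagonal=False):
    """Nearest-neighbour couplers for a width x height qubit grid.

    With hexagonal=True, every other vertical coupler is dropped, which
    gives a "brick wall" layout with the same connectivity as a honeycomb.
    """
    edges = set()
    for x in range(width):
        for y in range(height):
            if x + 1 < width:
                edges.add(((x, y), (x + 1, y)))
            if y + 1 < height and (not hexagonal or (x + y) % 2 == 0):
                edges.add(((x, y), (x, y + 1)))
    return edges


def max_degree(edges):
    """Largest number of couplers attached to any single qubit."""
    degree = {}
    for a, b in edges:
        degree[a] = degree.get(a, 0) + 1
        degree[b] = degree.get(b, 0) + 1
    return max(degree.values())


print(max_degree(grid_edges(6, 6)))                  # square grid: 4
print(max_degree(grid_edges(6, 6, hexagonal=True)))  # brick-wall grid: 3
```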

Walking Surface Code: In this implementation, the roles of data and measure qubits are swapped every cycle. This prevents errors such as leakage from accumulating in specific "data" qubits, because every physical qubit is periodically reset. This built-in error management suppressed the long-time error correlations that plague static designs.
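Here is a toy way to see why swapping roles helps. The checkerboard schedule below is a hypothetical simplification of my own, not Google's actual circuit, and it assumes a qubit is reset whenever it plays the measure role. It simply tracks the longest stretch any physical qubit goes without a reset.

```python
def role(x, y, cycle):
    """Hypothetical checkerboard schedule: a qubit's role flips every cycle."""
    return "data" if (x + y + cycle) % 2 == 0 else "measure"


def max_cycles_without_reset(width, height, cycles, walking=True):
    """Longest run of consecutive cycles any qubit spends as a data qubit.

    Assumes (for illustration) that a qubit is reset in every cycle in
    which it acts as a measure qubit.
    """
    worst = 0
    for x in range(width):
        for y in range(height):
            streak = 0
            for cycle in range(cycles):
                # In the static case the roles are frozen at their cycle-0 values.
                current = role(x, y, cycle if walking else 0)
                streak = 0 if current == "measure" else streak + 1
                worst = max(worst, streak)
    return worst


print(max_cycles_without_reset(5, 5, cycles=10, walking=False))  # 10
print(max_cycles_without_reset(5, 5, cycles=10, walking=True))   # 1
```

In the static schedule a data qubit can go the whole experiment without a reset, while in the walking schedule no qubit goes more than one cycle.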

iSWAP-based Code: They demonstrated a surface code using iSWAP gates instead of the traditional CNOT/CZ gates.

This highlights the flexibility of the dynamic framework: an entire gate family can be substituted without adding overhead, which broadens the hardware toolbox available for fault-tolerance. In all three cases, they achieved state-of-the-art error suppression when scaling from distance-3 to distance-5 codes, strong evidence that dynamic circuits are a viable path to fault-tolerance.

Why This Is an Interesting Shift

These experiments are more than just clever engineering. They signal a move away from treating QEC as a static overlay and toward a deeply integrated, co-design methodology. This architecture-aware approach recognises that the most efficient path to fault-tolerance lies in the interplay between the algorithm and the device.

The Takeaway

The future of scalable quantum computing will likely not be built on rigid, one-size-fits-all codes. It will be built on an elegant and necessary choreography between quantum software and the physical hardware it runs on.

Until next time,

Michaela